- Why Host Your Own Flux Model

- How To Set Up Flux

- Customizing Your AI Model

- Prompt Engineering For Flux

Why Host Your Own Flux Model

Hosting your own AI model offers significant benefits, particularly for users who demand fine control over image generation, privacy, and customisation. Unlike public cloud-based APIs, which may impose usage limitations or fees, self-hosting gives you full control over how the model operates.

You can adjust hardware configurations, implement optimised workflows, and ensure that your data remains private.

Flux is a text-to-image generative AI released in three variants:

- FLUX.1 Pro: The highest performing model, not available to self-host.

- FLUX.1 Dev: An efficient, open-weight model for non-commercial use, distilled from the Pro version. Available for self-hosting.

- FLUX.1 Schnell: The fastest model, designed for local development and personal use, with open-source access under an Apache 2.0 licence. Ideal for quick experimentation.

These cater to a range of needs, from professional-grade image generation to fast, local development.
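As a quick reference, the three variants can be written down as a small lookup table. This is only a sketch of the list above: the `FluxVariant` class and the `self_hostable_names` helper are names I made up for illustration, and the licence notes simply repeat what is stated in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FluxVariant:
    """One row of the variant list above (illustrative, not an official API)."""

    name: str
    self_hostable: bool
    licence_note: str


VARIANTS = [
    FluxVariant("FLUX.1 Pro", False, "not available to self-host"),
    FluxVariant("FLUX.1 Dev", True, "open weights, non-commercial use"),
    FluxVariant("FLUX.1 Schnell", True, "Apache 2.0"),
]


def self_hostable_names() -> list[str]:
    """Return the names of the variants you can run on your own hardware."""
    return [v.name for v in VARIANTS if v.self_hostable]


for variant in VARIANTS:
    print(f"{variant.name}: {variant.licence_note}")
```

Calling `self_hostable_names()` leaves you with Dev and Schnell, which are the two variants the rest of this post is about.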

By hosting Flux on your own infrastructure, you can achieve lower latency, improve performance, and integrate your customised AI model into your existing systems seamlessly. The most compelling advantage is the ability to experiment with cutting-edge AI in a more flexible and cost-effective way.

How To Set Up Flux

Setting up Flux for generative AI involves installing Python and the required dependencies, then configuring the Flux environment.

There are detailed instructions in the GitHub repo: https://github.com/black-forest-labs/flux

The open-weight variants of Flux, Dev and Schnell, are available for self-hosting: Dev under a non-commercial licence and Schnell under Apache 2.0.

I found this process easier on a Linux machine. Windows Subsystem for Linux may work too, but I’m not sure about performance through the VM. Macs should be fine, I expect, but I haven’t tested them.

This is the process:

- Clone the Flux repository

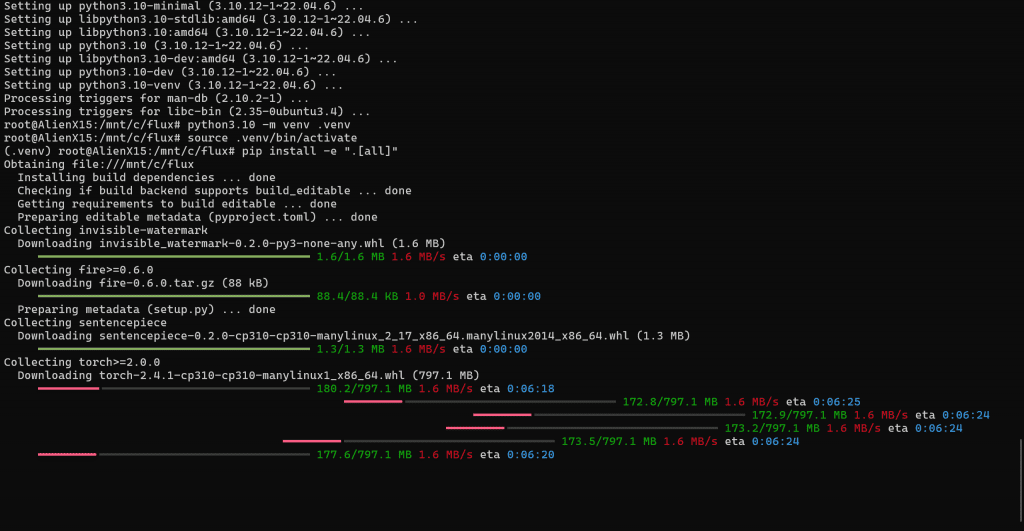

    git clone https://github.com/black-forest-labs/flux
    cd flux

- Create a Python environment and install

    python3 -m venv .venv
    source .venv/bin/activate
    pip install -e ".[all]"

- Choose and load your model: Use one of the available model variants

    python -m flux --name flux-dev --prompt "enter prompt here"
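If you would rather drive that last step from a script, here is a minimal sketch that wraps the same command with Python's `subprocess` module. It only uses the `--name` and `--prompt` flags shown above. The helper names `build_flux_command` and `generate` are my own, and the sketch assumes you run it from the cloned repo with the virtual environment activated.

```python
import subprocess
import sys


def build_flux_command(prompt: str, model: str = "flux-dev") -> list[str]:
    """Return the argument list for the Flux command shown above."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    # sys.executable is the interpreter running this script, so the
    # command stays inside the currently active virtual environment.
    return [sys.executable, "-m", "flux", "--name", model, "--prompt", prompt]


def generate(prompt: str, model: str = "flux-dev") -> None:
    """Run the Flux command once; raises CalledProcessError if it fails."""
    subprocess.run(build_flux_command(prompt, model), check=True)
```

Calling `generate("enter prompt here")` then does the same thing as typing the command by hand, which is handy once you start looping over many prompts.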

There is also a list of cloud partners in the GitHub repo:

https://github.com/black-forest-labs/flux

Customizing Your AI Model

Once Flux is installed, you can customise its functionality to suit your needs. Customisation options include:

- Adjusting the number of inference steps for higher quality images or faster generation.

- Modifying the guidance scale to control how closely the images adhere to your prompt.
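To keep these two settings together, something like the following sketch could work. Treat it as an assumption-heavy illustration: the `SamplingSettings` class is hypothetical, the starting values are placeholders rather than official defaults, and the `--num_steps` and `--guidance` flag names are my assumption about the command-line options, so check them against the repo before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SamplingSettings:
    """Illustrative container for the two settings described above."""

    num_steps: int = 50    # placeholder starting point, not an official default
    guidance: float = 3.5  # placeholder starting point, not an official default

    def __post_init__(self) -> None:
        # Reject values that cannot make sense for either setting.
        if self.num_steps < 1:
            raise ValueError("num_steps must be at least 1")
        if self.guidance < 0:
            raise ValueError("guidance must not be negative")

    def to_cli_args(self) -> list[str]:
        """Render the settings as command-line arguments.

        The flag names are assumptions; verify them in the repo.
        """
        return ["--num_steps", str(self.num_steps), "--guidance", str(self.guidance)]


# Fewer steps trade image quality for speed.
fast_draft = SamplingSettings(num_steps=4)
print(fast_draft.to_cli_args())
```

Keeping the values in one validated object makes it easier to compare a fast draft run against a slower, higher quality one without retyping flags.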

You can also fine-tune the model on your own dataset to tailor image generation towards a specific style or type of content.

I haven’t tried any of this myself, so dig into the docs if you want to go further.

Prompt Engineering For Flux

Prompt engineering plays a vital role in getting the results you want from generative AI models like Flux. The model copes well with complex requests, but it works best with short, concise descriptions.

A well crafted prompt includes:

- Specific descriptions: The more specific your prompt, the more accurate the generated image will be. For instance, instead of “a cat in a garden”, try “a fluffy orange cat sitting in a vibrant, sunlit flower garden”.

- Contextual cues: Adding context like “in the style of a 19th century painting” or “photorealistic with natural lighting” helps refine the outcome.

- Iterative refinement: There’s no cost per image here (apart from electricity and compute time), so test prompts repeatedly and make minor adjustments to optimise the results.
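The three points above can be sketched as a tiny prompt builder. This is just one way to organise it: `build_prompt` and `style_variations` are names I invented, and joining the parts with commas is a habit of mine rather than anything Flux requires.

```python
def build_prompt(subject: str, details: list[str], style: str = "") -> str:
    """Join a subject, descriptive details, and an optional style cue."""
    parts = [subject.strip()] + [d.strip() for d in details if d.strip()]
    if style.strip():
        parts.append(style.strip())
    return ", ".join(parts)


def style_variations(subject: str, details: list[str], styles: list[str]) -> list[str]:
    """Return one prompt per style cue, for side-by-side comparison."""
    return [build_prompt(subject, details, style) for style in styles]


prompts = style_variations(
    "a fluffy orange cat",
    ["sitting in a vibrant, sunlit flower garden"],
    ["in the style of a 19th century painting", "photorealistic with natural lighting"],
)
for prompt in prompts:
    print(prompt)
```

Generating one image per variation and changing a single cue at a time makes it much easier to see which change actually improved the result.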

Ultimately, the art of prompt engineering is about striking the right balance between specificity and creativity to unlock the model’s full potential.

Hosting your own Flux model gives you unparalleled control over your AI-powered projects. Flux’s robust architecture and impressive models make it an ideal choice for generative text-to-image AI. Enjoy.