A practical, repeatable workflow for turning an idea into production-ready code using ChatGPT + Codex, with human review at every critical boundary. This is optimised for developers who want speed without surrendering architectural control.

This workflow separates thinking from typing:

- You own architecture, constraints, and quality bars

- ChatGPT helps crystallise intent into specs

- Codex executes scoped, testable tasks in parallel-safe chunks

- You review, deploy, and iterate with AI as a pair-programmer—not an autopilot

The key insight: AI performs best when it is given narrow, explicit tasks with completion criteria.

Step 1 — High-Level Design With ChatGPT

Goal: Convert a fuzzy idea into a clear technical spec before any code is written.

What to Discuss

- Problem statement

- Non-goals (very important)

- Constraints (language, framework, chain, infra, budget, security)

- Success criteria

- Open questions / assumptions

Example Prompt (Architecture Spec)

You are a senior software architect.

I want to build a [brief product description].

Constraints:

- Language: Rust + Soroban

- Frontend: Next.js

- Target environment: Stellar testnet

- Security: non-custodial, no admin key

- Non-goals: no governance, no upgradeability (for now)

Please produce:

1. A concise problem statement

2. High-level architecture

3. Data models

4. Key contract entrypoints

5. Threat model (top 5 risks)

6. Explicit non-goals

7. Open questions I need to decide

Output You Want

- A written spec, not code

- Something you could hand to another developer

- Clear enough that implementation is mechanical

Do not proceed until this feels right.
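One way to make "feels right" a little less subjective is to check that the spec covers everything the prompt asked for. The sketch below assumes the spec was saved as markdown with one heading per requested output. The section keywords and the `missing_sections` helper are hypothetical names chosen for illustration, not part of any tool.

```python
import re

# Keywords mirroring the seven outputs requested in the prompt above.
# These are assumptions: adjust them to the headings your spec actually uses.
REQUIRED_SECTIONS = [
    "problem statement",
    "architecture",
    "data models",
    "entrypoints",
    "threat model",
    "non-goals",
    "open questions",
]


def missing_sections(spec_text: str) -> list[str]:
    """Return the required keywords that no markdown heading in the spec mentions."""
    headings = [
        match.group(1).lower()
        for match in re.finditer(r"^#+[ \t]*(.+)$", spec_text, flags=re.MULTILINE)
    ]
    return [
        name
        for name in REQUIRED_SECTIONS
        if not any(name in heading for heading in headings)
    ]
```

An empty result only says the sections exist. Whether their content is good enough is still a human call.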

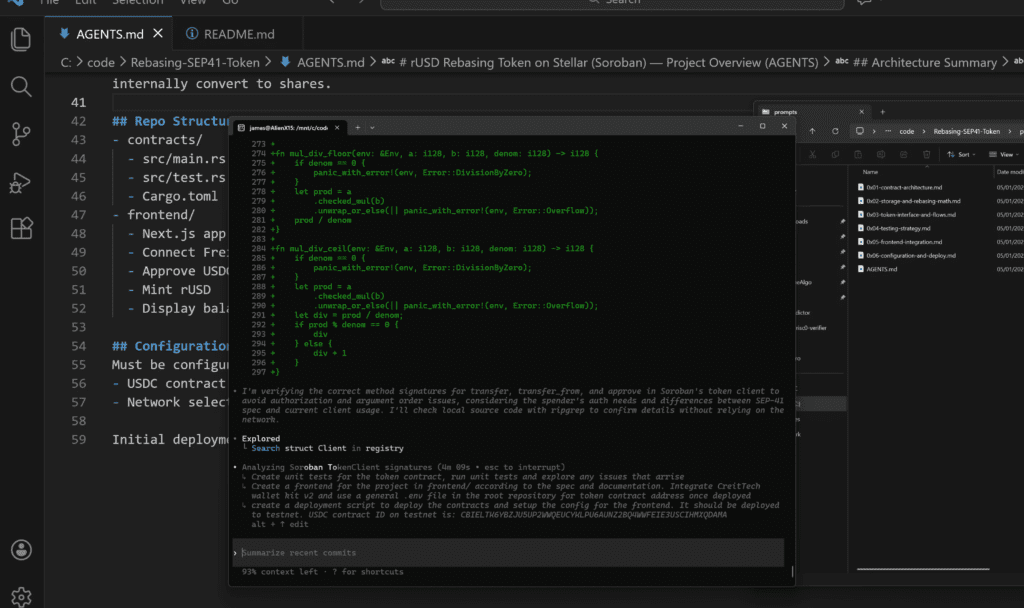

Step 2 — Create an AGENTS.md File

Goal: Define how Codex should behave across the entire repo.

This file becomes the “constitution” for automated coding.

Example AGENTS.md

# AI Agent Instructions

## General Rules

- Do not invent APIs or dependencies

- Prefer simplicity over cleverness

- No breaking changes without explicit instruction

- All code must compile

## Code Style

- Rust: idiomatic, no unsafe unless required

- JS/TS: strict typing, no `any`

- Comments explain *why*, not *what*

## Testing

- Every contract function must have unit tests

- Tests must assert failure cases

- Avoid mocks where possible

## Security

- No unchecked external calls

- Explicit authorization checks

- Fail closed, not open

## Output Rules

- Modify only files specified in the task

- Do not refactor unrelated code

- Explain assumptions at the end of each task

In practice this noticeably improves consistency and reduces hallucinations, because every task starts from the same explicit rules.

Step 3 — Use Codex to Generate Task Files

Goal: Break the project into small, serialisable tasks with clear completion criteria.

Run Codex locally (WSL works well) using a high-capability coding model.

I’ll also update the ~/.codex/config.toml file, using the example from Peter Steinberger:

model = "gpt-5.2-codex"

model_reasoning_effort = "high"

tool_output_token_limit = 25000

# Leave room for native compaction near the 272–273k context window.

# Formula: 273000 - (tool_output_token_limit + 15000)

# With tool_output_token_limit=25000 ⇒ 273000 - (25000 + 15000) = 233000

model_auto_compact_token_limit = 233000

[features]

ghost_commit = false

unified_exec = true

apply_patch_freeform = true

web_search_request = true

skills = true

shell_snapshot = true

[projects."/mnt/c/code"]

trust_level = "trusted"

Initial Codex Prompt

You are an autonomous coding agent.

Using the provided spec and AGENTS.md:

- Decompose this project into a sequence of tasks

- Each task must be independently executable

- Tasks should build on each other

- Each task should produce a concrete output

Create one markdown file per task named:

tasks/0x01-task.md, tasks/0x02-task.md, etc.

Each task file must include:

- Objective

- Files to modify

- Detailed steps

- Completion criteria

Example Task File (tasks/0x01-task.md)

## Objective

Set up the base Soroban contract with storage layout and skeleton functions.

## Files to Modify

- contracts/src/lib.rs

- contracts/Cargo.toml

## Steps

1. Create contract struct

2. Define storage keys

3. Add placeholder entrypoints

4. Ensure contract compiles

## Completion Criteria

- `cargo test` passes

- No unused imports

- No TODOs left in code

Think of these as mini-specs, not tickets.
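Because every task file is meant to follow the same shape, the generated files can be checked mechanically before any of them is executed. The following is a minimal sketch, assuming the files use the `##` headings shown in the example above. `parse_sections` and `validate_task` are hypothetical helpers, and the heading names would need adjusting if Codex emits, say, "Detailed steps" instead of "Steps".

```python
import re

# Headings taken from the example task file, compared case-insensitively.
REQUIRED_HEADINGS = ["objective", "files to modify", "steps", "completion criteria"]


def parse_sections(task_markdown: str) -> dict[str, list[str]]:
    """Map each '## Heading' (lowercased) to the non-empty lines beneath it."""
    sections: dict[str, list[str]] = {}
    current = None
    for raw in task_markdown.splitlines():
        line = raw.strip()
        heading = re.match(r"^##\s+(.+)$", line)
        if heading:
            current = heading.group(1).strip().lower()
            sections[current] = []
        elif current is not None and line:
            sections[current].append(line)
    return sections


def validate_task(task_markdown: str) -> list[str]:
    """Return a list of problems; an empty list means the file has every section."""
    sections = parse_sections(task_markdown)
    problems = []
    for name in REQUIRED_HEADINGS:
        if name not in sections:
            problems.append(f"missing section: {name}")
        elif not sections[name]:
            problems.append(f"empty section: {name}")
    return problems
```

Running `validate_task` over each file in `tasks/` before starting is a cheap way to catch a task with no completion criteria, which is one of the failure causes listed later in this post.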

Step 4 — Schedule & Execute Tasks Sequentially

Let Codex work in the background while you stay in control.

Best Practices

- Run one task at a time

- Review output before continuing

- Reject or revise tasks aggressively

- Keep diffs small

Execution Prompt Template

Execute tasks/0x02-task.md exactly as written.

Rules:

- Modify only the listed files

- Do not anticipate future tasks

- If something is unclear, state assumptions

- Stop when completion criteria are met

This avoids cascading errors and architectural drift.
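The "modify only the listed files" rule can also be verified after the fact instead of trusted. As a rough sketch, assume you have the task's "Files to Modify" entries and the list of paths printed by `git diff --name-only`. The `normalise` and `out_of_scope` helpers are hypothetical and simply compare the two lists.

```python
import posixpath


def normalise(path: str) -> str:
    """Strip a leading list marker and whitespace, then collapse './' segments."""
    cleaned = path.strip().removeprefix("- ").strip()
    return posixpath.normpath(cleaned)


def out_of_scope(changed_files: list[str], allowed_files: list[str]) -> list[str]:
    """Return changed paths that the task file did not list as modifiable."""
    allowed = {normalise(path) for path in allowed_files}
    changed = {normalise(path) for path in changed_files}
    return sorted(changed - allowed)
```

Any path this returns is a reason to reject the diff or to go back and widen the task file deliberately, rather than letting the scope creep unnoticed.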

Step 5 — Deploy, Test, Snag, Iterate

The next step is to deploy it, see whether it actually works, and then fix everything that is still meh.

At this stage I also like to carry out a security review using a couple of the leading models, usually Codex and Claude. Different models catch different classes of issues.

Security Review Prompt

Perform a security review of this Soroban contract.

Assume:

- Adversarial users

- No trusted off-chain components

List:

- Attack vectors

- Severity

- Concrete mitigations
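When two models review the same contract, their findings overlap, so it helps to merge them into one list before triaging. The sketch below assumes you have already copied each finding into a small record by hand. The `Finding` type, the severity scale, and `merge_findings` are all hypothetical and do not reflect the output format of either model.

```python
from dataclasses import dataclass

# Assumed severity scale; adjust to whatever labels the reviews actually use.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Finding:
    title: str
    severity: str
    mitigation: str
    source: str  # which model reported it


def merge_findings(findings: list[Finding]) -> list[dict]:
    """Group findings by normalised title, keeping the highest severity seen."""
    merged: dict[str, dict] = {}
    for finding in findings:
        key = " ".join(finding.title.lower().split())
        entry = merged.setdefault(
            key,
            {"title": finding.title, "severity": finding.severity,
             "mitigations": [], "sources": []},
        )
        if SEVERITY_RANK[finding.severity] > SEVERITY_RANK[entry["severity"]]:
            entry["severity"] = finding.severity
        if finding.mitigation not in entry["mitigations"]:
            entry["mitigations"].append(finding.mitigation)
        if finding.source not in entry["sources"]:
            entry["sources"].append(finding.source)
    # Most severe first; ties keep the order in which they were first reported.
    return sorted(merged.values(), key=lambda e: -SEVERITY_RANK[e["severity"]])
```

Matching on titles is crude, so treat the merged list as a starting point for review. Findings reported by only one model deserve the same attention as the ones both agree on.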

Some Notes on AI-Assisted Development

- Specs are leverage

- Tasks are contracts

- AI writes code, you ship systems

- Small scopes beat big prompts

- Review is not optional

If something goes wrong, it’s almost always because:

- The spec was vague

- The task was too large

- Completion criteria were missing

When This Workflow Shines

- New protocols or chains

- Complex smart contracts

- Greenfield projects

- Rapid prototyping with production intent

- Solo developers acting like a small team

Treat AI like a junior developer with infinite stamina and zero intuition. Give it hard boundaries and clear criteria, then let it work overtime to create the best products possible.