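Protodanksharding and Danksharding are two approaches to scaling the amount of data that can be posted on-chain in a future version of Ethereum. The goal of both upgrades is to ensure that data posted on-chain is available to archiving parties at the time it is first posted. In full Danksharding this is verified through a technique called Data Availability Sampling (DAS). Protodanksharding introduces the blob format and its fee market without sampling, so every node still downloads each blob in full.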

When & Why

Protodanksharding is specified in EIP-4844 and is scheduled to roll out as part of the Cancun upgrade, probably later this year. Danksharding will follow at a later date and will further increase the amount of data available to clients.

Ethereum has been facing scalability issues and network congestion, resulting in high transaction fees. To address these problems, developers are rolling out a series of updates as part of the ETH2 upgrade.

What is Sharding

Sharding is a technique commonly used in centralized database management, where a database is split into smaller parts or shards to increase efficiency and scalability. Similarly, sharding a blockchain network involves splitting the network into distinct shards, with each shard responsible for storing a portion of the chain’s data and handling a unique subset of transactions.

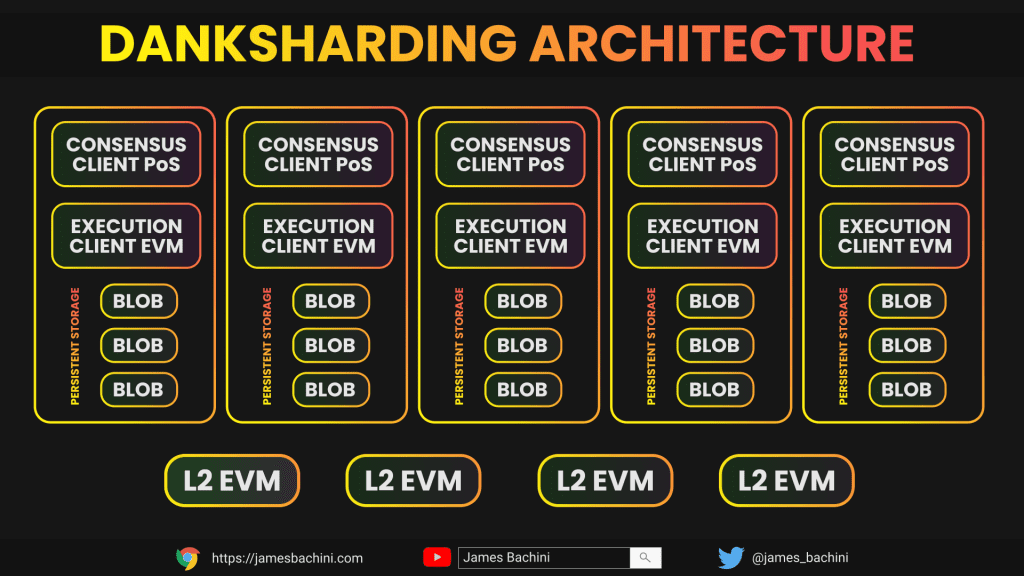

Danksharding is a form of sharding where the data posted to the chain is split into blobs, rather than splitting the chain itself into separate shard chains.

What is Protodanksharding

Protodanksharding is an intermediary update on the path towards a full Danksharding upgrade. It introduces a new transaction type called a “blob-carrying transaction.” Each blob is a data packet of 128 KB, and up to four blobs can be included per block, adding up to 512 KB of additional data per block.
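
The arithmetic behind those figures can be sketched in a few lines of Python. This is a rough illustration, not client code: the two blob-size constants follow the names used in EIP-4844, while `MAX_BLOBS_PER_BLOCK = 4` is simply the figure quoted in this article, and `blobs_needed` is a hypothetical helper that ignores encoding overhead.

```python
# Blob sizing constants as named in EIP-4844.
BYTES_PER_FIELD_ELEMENT = 32
FIELD_ELEMENTS_PER_BLOB = 4096

# Assumption: the per-block limit quoted in this article.
MAX_BLOBS_PER_BLOCK = 4

BLOB_SIZE_BYTES = BYTES_PER_FIELD_ELEMENT * FIELD_ELEMENTS_PER_BLOB  # 131072 bytes = 128 KB


def max_blob_bytes_per_block(blobs: int = MAX_BLOBS_PER_BLOCK) -> int:
    """Upper bound on blob data carried by one block."""
    return blobs * BLOB_SIZE_BYTES


def blobs_needed(payload_bytes: int) -> int:
    """Hypothetical helper: blobs required for a payload, ignoring encoding overhead.

    Real encodings fit slightly fewer than 32 usable bytes per field element,
    so treat this as a lower bound on the blob count.
    """
    return -(-payload_bytes // BLOB_SIZE_BYTES)  # ceiling division


print(BLOB_SIZE_BYTES // 1024, "KB per blob")
print(max_blob_bytes_per_block() // 1024, "KB of blob data per block")
```

A rollup batch of 300,000 bytes, for example, would need at least `blobs_needed(300_000)` = 3 blobs under these assumptions.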

These data blobs will only be stored for a limited time, and the data will not be directly accessible to smart contracts. Instead, only a short commitment to the blob data, a versioned hash exposed through the DATAHASH opcode (renamed BLOBHASH in later revisions of the EIP), will be available to smart contracts.

The architecture takes a rollup-centric approach, simplifying the design and increasing the space available for data blobs. By expanding the capacity and efficiency of data storage on L1 and optimising it for L2s, the network can scale by orders of magnitude.
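
To make the "short commitment" concrete, here is a minimal sketch of how EIP-4844 derives the 32-byte versioned hash from a blob's 48-byte KZG commitment: a one-byte version prefix followed by the SHA-256 digest of the commitment with its first byte dropped. The commitment used below is an all-zero placeholder for illustration only, not a real KZG commitment, and computing a real one requires a KZG library that is out of scope here.

```python
import hashlib

# Version prefix for KZG-based commitments, as defined in EIP-4844.
VERSIONED_HASH_VERSION_KZG = b"\x01"


def kzg_to_versioned_hash(commitment: bytes) -> bytes:
    """Derive the 32-byte versioned hash a contract can read for a blob."""
    if len(commitment) != 48:
        raise ValueError("a KZG commitment is 48 bytes long")
    # Replace the first byte of the SHA-256 digest with the version byte.
    return VERSIONED_HASH_VERSION_KZG + hashlib.sha256(commitment).digest()[1:]


# Placeholder input (assumption): 48 zero bytes standing in for a commitment.
placeholder_commitment = bytes(48)
versioned_hash = kzg_to_versioned_hash(placeholder_commitment)
print(versioned_hash.hex())
```

The point of the design is that a contract only ever sees these 32 bytes, regardless of the 128 KB of blob data behind them.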

What is Danksharding

The main idea behind Danksharding is to expand the number of blobs attached to each block from the handful allowed under Protodanksharding to 64 in full Danksharding.

The Ethereum protocol itself does not interpret these blobs of data, which leaves more room for data storage and processing. By raising the maximum number of blobs per block, Danksharding is expected to increase the data available to clients by roughly 60x over Protodanksharding, from about 0.5 MB per block to about 30 MB.
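
As a quick sanity check on those numbers, the sketch below converts the per-block figures quoted in this article into a scale factor and a sustained data rate. The only outside assumption is Ethereum's 12-second slot time; the per-block sizes are the article's own estimates and the function names are illustrative.

```python
SLOT_SECONDS = 12  # Ethereum proof-of-stake slot time

# Per-block blob data figures quoted in this article (estimates).
PROTO_MB_PER_BLOCK = 0.5
FULL_MB_PER_BLOCK = 30


def scale_factor(full_mb: float, proto_mb: float) -> float:
    """How many times more blob data a full Danksharding block carries."""
    return full_mb / proto_mb


def mb_per_second(mb_per_block: float) -> float:
    """Sustained blob data rate if every slot carries a maximally full block."""
    return mb_per_block / SLOT_SECONDS


print(scale_factor(FULL_MB_PER_BLOCK, PROTO_MB_PER_BLOCK), "x increase")
print(round(mb_per_second(PROTO_MB_PER_BLOCK), 3), "MB/s under Protodanksharding")
print(mb_per_second(FULL_MB_PER_BLOCK), "MB/s under full Danksharding")
```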

Danksharding addresses the issue of storing such large amounts of data by dispersing it among validators, each of whom stores only a small subset of the data. However, this presents a challenge of ensuring that the block can be reconstructed at a later time. To accomplish this, the block is encoded into a larger block using erasure coding and broken into overlapping fragments that are sent to each validator. The validator checks that the fragment it received is consistent with the signed commitments that it received from the block builder.
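
The erasure-coding idea can be illustrated with a toy Reed-Solomon-style code. This is a sketch under simplifying assumptions, not the scheme Ethereum uses: it works over a tiny prime field (`P = 65537`), treats the data as evaluations of a polynomial, doubles the number of fragments, and rebuilds the original from any half of them. All names here are hypothetical.

```python
P = 65537  # small prime field, for illustration only


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through points, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+).
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data):
    """Extend k data values (each < P) into 2k fragments; the first k equal the data."""
    k = len(data)
    points = list(enumerate(data))
    return [lagrange_eval(points, x) for x in range(2 * k)]


def reconstruct(fragments, k):
    """Rebuild the k original values from any k surviving fragments {index: value}."""
    if len(fragments) < k:
        raise ValueError("not enough fragments to reconstruct")
    points = list(fragments.items())[:k]
    return [lagrange_eval(points, x) for x in range(k)]


data = [3, 1, 4, 1]
coded = encode(data)
# Pretend only fragments 1, 4, 6 and 7 survived.
surviving = {i: coded[i] for i in (1, 4, 6, 7)}
print(reconstruct(surviving, k=len(data)))
```

Because any half of the fragments is enough, no single validator has to hold the whole block for it to remain recoverable.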

- 💻 Client sends data blobs (transactions or bundles) to a builder

- 🛠️ Builder creates a block and sends pieces of it to validators

- 👥 Block proposer (one of the validators) shares the block on the network

- 🔍 Sampling validator verifies the block header through the sampling protocol and signs it

- 🔄 Reconstruction agent rebuilds a previous block by interacting with all validators
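
The sampling step in the list above can be reasoned about with a little probability. The sketch below is a simplified model, not the protocol's actual parameters: it assumes a 2x erasure code, so a block is unrecoverable only when less than half of the extended fragments are available, and it assumes each sample is an independent, uniformly random fragment request. The function names are illustrative.

```python
import math


def fooled_probability(available_fraction: float, samples: int) -> float:
    """Chance that every sample succeeds even though only this fraction is available."""
    if not 0 < available_fraction < 1:
        raise ValueError("available_fraction must be strictly between 0 and 1")
    return available_fraction ** samples


def samples_needed(available_fraction: float, max_fooled_probability: float) -> int:
    """Smallest number of samples that pushes the failure chance below the target."""
    if not 0 < available_fraction < 1:
        raise ValueError("available_fraction must be strictly between 0 and 1")
    return math.ceil(math.log(max_fooled_probability) / math.log(available_fraction))


# Worst case for a 2x code: just under half of the fragments are available.
print(fooled_probability(0.5, 30))
print(samples_needed(0.5, 1e-9))
```

Under this model, around 30 small samples are enough for a validator to be wrong less than one time in a billion, which is why sampling scales better than downloading the whole block.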

Layer 2 Fees

The effect of EIP-4844’s rollout is that fees across layer 1, and particularly on L2 rollups, will be reduced. Whereas a transaction on Arbitrum or Optimism may cost $0.30 now, next year it might cost less than $0.05.

Combine that with the emergence of various zkRollup L2 networks and I think there will be a strong narrative of moving money around to the latest and greatest L2 ecosystem. Early adopters of new L2 chains will rotate funds and make money as the markets catch up.

This could provide an opportunity similar to the alternate layer 1 DeFi ecosystem explosion which started with the launch of Binance Smart Chain and perhaps ended with the collapse of the Luna/Terra network.

During this period it was very profitable to invest in the governance tokens of leading DeFi products on upcoming chains. Today there is a different paradigm built around cross-chain communications and multichain protocols.

It is difficult to see how alternate layer 1 chains gain traction in a world with low L2 fees. It is equally difficult to see how DeFi forks provide sustainable investment opportunities in a world where Uniswap is on every chain.

The native tokens of L2s do not have the same supply dynamics as alternate layer 1 chains because the governance tokens are not used as staking rewards to secure the network. On Arbitrum, gas fees are paid in ETH, which is used to pay the parent transaction gas fees for L1 proofs. The ARB token lacks utility in this department, but it also lacks the main source of distribution: proof-of-stake rewards. In the case of L2s, governance token distribution comes mainly from early VC deals and team allocations. If a zk L2 does a fair launch or releases a compelling product with limited governance supply, that could be very interesting from an investment perspective.

Eventually I expect the Privacy and Scaling Explorations team, which is part of the Ethereum Foundation, will launch a series of interconnected zk-rollups that form an official Ethereum L2. That network will perhaps just be known as Ethereum, with L1 used solely as a chain of proofs and “legacy transactions”.

Conclusion

Danksharding is a vital component in making Ethereum more scalable, allowing for hundreds of individual rollups and 100,000+ transactions per second.

By breaking the data down into blobs, sharding it across the network and optimising it for layer 2s, Ethereum can scale to meet the expanding utility and demand for the network.

This will make blockchain data storage cheap, fast and efficient and open up new use cases for Solidity developers.

Where blockchain devs have been constrained by the cost and scarcity of on-chain data, in the future we will be able to use block space in the same way we use databases today.

In this evolving paradigm, web3 will be able to compete with web2 client-server technologies, and decentralized applications will thrive.